DBSCAN(Density-Based Spartial Clustering of Applications with Noise)

높은 density를 가진 core samples를 찾아내고 그로부터 cluster를 확장시켜나가는 방법

1. Algorithm

$\epsilon$-neighborhood

한 sample로부터 epsilon 거리 이내의 지역

Core instance(point, sample)

$\epsilon$-neighborhood에 속한 sample 수가 min_samples 이상인 sample

- Core instance와 $\epsilon$-neighborhood 내부의 sample들을 같은 cluster로 지정

- Sample이 다수의 core instance의 $\epsilon$-neighborhood 내부에 있을 경우, 모두 같은 cluster로 지정

- Cluster에 포함되지 않는 sample은 outlier로 판단

2. Features

- 중요 parameter가 적음(

epsilon($\epsilon$),min_samples($MinPts$)) - Cluster의 모양과 크기에 구애받지 않음

- Outlier에 안정적

- Cluster 간의 밀집도가 크게 다르면 잘 작동하지 않음

→ OPTICS가 해결 - Time complexity: $O(n \log n)$

Space complexity: $O(n^2)$ (scikit-learn implementation)

3. OPTICS

OPTICS(Ordering Points To Identify the Clustering Structure)

epsilon을 고정시키지 않고 cluster 계층을 유지시키는 DBSCAN의 일종

Core distance

$\epsilon$-neighborhood에 속한 sample들 중 min_samples번째 가까운 sample 과의 거리

Reachability distance

3.1 Algorithm

function OPTICS(DB, eps, MinPts) is

for each point p of DB do

p.reachability-distance = UNDEFINED

for each unprocessed point p of DB do

N = getNeighbors(p, eps)

mark p as processed

output p to the ordered list

if core-distance(p, eps, MinPts) != UNDEFINED then

Seeds = empty priority queue

update(N, p, Seeds, eps, MinPts)

for each next q in Seeds do

N' = getNeighbors(q, eps)

mark q as processed

output q to the ordered list

if core-distance(q, eps, MinPts) != UNDEFINED do

update(N', q, Seeds, eps, MinPts)

function update(N, p, Seeds, eps, MinPts) is

coredist = core-distance(p, eps, MinPts)

for each o in N

if o is not processed then

new-reach-dist = max(coredist, dist(p,o))

if o.reachability-distance == UNDEFINED then // o is not in Seeds

o.reachability-distance = new-reach-dist

Seeds.insert(o, new-reach-dist)

else // o in Seeds, check for improvement

if new-reach-dist < o.reachability-distance then

o.reachability-distance = new-reach-dist

Seeds.move-up(o, new-reach-dist)

3.2 DBSCAN vs OPTICS

Demo of OPTICS clustering algorithm 참고

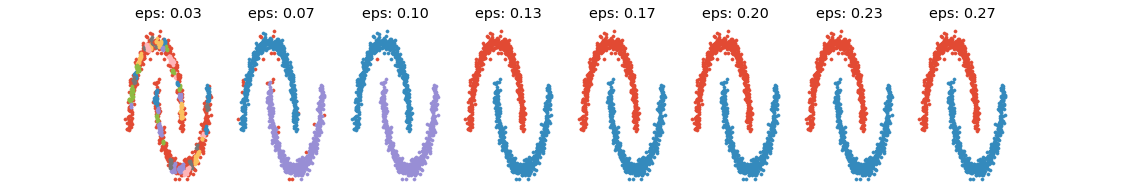

4. Code

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

plt.style.use('ggplot')

from sklearn.cluster import DBSCAN

from sklearn.datasets import make_moons

from sklearn.metrics import silhouette_score

X, y = make_moons(n_samples=1000, noise=0.05)

scores = pd.Series(dtype=np.float32)

fig, axes = plt.subplots(1, 10, figsize=(20, 3))

for ax, eps in zip(axes.flat, np.linspace(0, 0.3, 10)):

try:

dbscan = DBSCAN(eps=eps, min_samples=5)

dbscan.fit(X)

clusters = dbscan.labels_ # -1: outlier

idx_core_samples = dbscan.core_sample_indices_

core_samples = dbscan.components_

scores.loc[eps] = silhouette_score(X[clusters >= 0], clusters[clusters >= 0])

ax.set_title(f"eps: {eps:.2f}")

for cluster in np.unique(clusters):

idxs = np.where(clusters == cluster)[0]

ax.scatter(X[idxs, 0], X[idxs, 1], s=10)

except Exception as e:

pass

ax.axis('off')

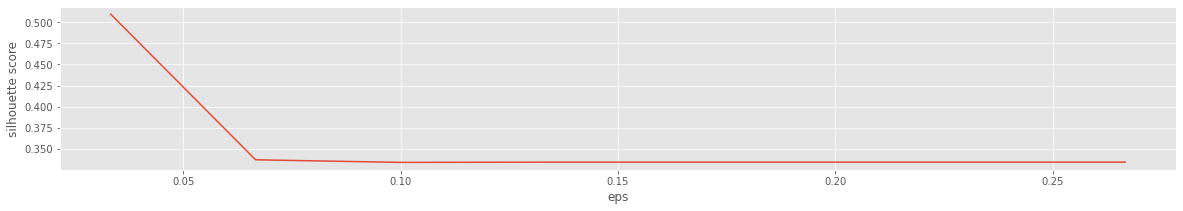

plt.show()

scores.plot(figsize=(20, 3), xlabel='eps', ylabel='silhouette score');

※ CAUTION!

Cluster가 구형이 아닐수록 silhouette score는 의미를 잃는다

eps = 0.15

dbscan = DBSCAN(eps=eps, min_samples=5)

dbscan.fit(X)

clusters = dbscan.labels_ # -1: outlier

idx_core_samples = dbscan.core_sample_indices_

core_samples = dbscan.components_

predict()가 없기 때문에 다른 classifier를 사용하여 새로운 data에 대하여 예측을 수행한다.

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier(n_neighbors=50)

knn.fit(dbscan.components_, dbscan.labels_[dbscan.core_sample_indices_])

X_new = np.array([[-0.5, 0], [0, 0.5], [1, -0.1], [2, 1]])

preds = knn.predict(X_new)

preds_prob = knn.predict_proba(X_new)

preds, preds_prob

(array([0, 1, 0, 1]),

array([[0.82, 0.18],

[0. , 1. ],

[0.8 , 0.2 ],

[0. , 1. ]]))

PREVIOUSEtc